Hello guys, I am Cuong Vu Duc, a Backend Software Engineer Intern at CyberAgent Hanoi DevCenter. In this post, I would like to introduce how GitHub Actions and PipeCD can be integrated for continuous integration and delivery of AWS SageMaker Pipeline. This was one of my tasks during the internship, where I was in charge of designing and implementing the MLOps platform.

Background

Most of my team’s infrastructure is hosted on AWS, so we chose SageMaker as the backbone of our machine learning platform. This lets us draw on the rest of Amazon’s cloud ecosystem without extra integration work. As part of this setup, we build ML workflows with SageMaker Pipeline and develop them with the SageMaker Python SDK. GitHub Actions and PipeCD are our primary tools for shipping new ML features to the production environment automatically.

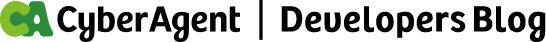

We currently run two AWS Elastic Container Service (ECS) clusters, one for hosting PipeCD Agents and the other for deploying the backend services. The new ECS standalone task that creates and launches the SageMaker Pipeline is triggered within the backend cluster. The overall architecture is shown in the accompanying diagram.

In short, the GitHub Actions workflow is triggered when developers push changes to the remote repository, and it builds and publishes a new Docker image version. Then PipeCD, with ECS as the runtime environment for the Docker image, creates the standalone task that updates and executes the AWS SageMaker Pipeline.

CI/CD Workflow Configuration

Dockerize the Pipeline Image

First, prepare a Dockerfile to create the virtual environment, install dependencies, and pack the pipeline code base as a Docker image. Since this pipeline image is only meant to run the SageMaker SDK, there is no need to include the packages for data processing or ML experimenting. Combined with the multi-stage build technique, this keeps the Docker image small and, as a result, speeds up the CI process.

# pipeline/Dockerfile
FROM python:3.10.16 AS build
RUN python -m venv /venv

# build stage
FROM build AS build-venv
ENV PATH="/venv/bin:$PATH"
RUN pip install sagemaker

# final stage
FROM python:3.10.16-slim
COPY --from=build-venv /venv /venv
COPY . /app
WORKDIR /app
CMD ["/venv/bin/python3", "run_pipeline.py"]

Distribute Image & Trigger PipeCD Actions

Next, use the docker/build-push-action action to automate the build and push steps. Depending on the Docker registry provider, credentials may need to be set up beforehand so that the workflow has permission to publish the newly built image. As we are using a private AWS Elastic Container Registry (ECR), it is recommended to use OpenID Connect. This securely passes the necessary role to the CI runner instead of storing a long-lived secret within a third-party platform like GitHub.

# .github/workflows/distribute_pipeline.yml
jobs:
  container-images:
    runs-on: ubuntu-latest
    permissions:
      contents: read
      id-token: write # needed to request the OIDC token used to assume the AWS role
    steps:
      ...
      - name: Configure AWS credentials
        uses: aws-actions/configure-aws-credentials@v4
        with:
          role-to-assume: "your-ecr-push-role-arn"
          aws-region: "your-aws-region" # placeholder; the action needs a region
      - name: Login to Amazon ECR
        uses: aws-actions/amazon-ecr-login@v2
      - name: Extract metadata (tags, labels) for Docker
        id: meta
        uses: docker/metadata-action@v5
        with:
          images: "your-image-arn"
      - name: Build and push server container image
        uses: docker/build-push-action@v6
        with:
          context: .
          push: true
          platforms: linux/amd64
          tags: ${{ steps.meta.outputs.tags }}
          file: pipeline/Dockerfile
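For OpenID Connect to work, the role passed to `role-to-assume` needs a trust policy that accepts tokens issued by GitHub. The post does not show that policy, so the following is only a minimal sketch of what it commonly looks like, written as a small Python helper. The function name, account ID, repository, and branch are all hypothetical and need to be replaced with your own values.

```python
import json


def github_oidc_trust_policy(account_id: str, repo: str, branch: str = "main") -> dict:
    """Build an IAM trust policy letting one GitHub repo/branch assume a role via OIDC."""
    provider = "token.actions.githubusercontent.com"
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                # The OIDC identity provider must already exist in the AWS account.
                "Principal": {
                    "Federated": f"arn:aws:iam::{account_id}:oidc-provider/{provider}"
                },
                "Action": "sts:AssumeRoleWithWebIdentity",
                "Condition": {
                    # Audience set by aws-actions/configure-aws-credentials.
                    "StringEquals": {f"{provider}:aud": "sts.amazonaws.com"},
                    # Restrict the role to a single repository and branch.
                    "StringLike": {
                        f"{provider}:sub": f"repo:{repo}:ref:refs/heads/{branch}"
                    },
                },
            }
        ],
    }


if __name__ == "__main__":
    # Hypothetical account ID and repository, for illustration only.
    policy = github_oidc_trust_policy("123456789012", "my-org/my-repo")
    print(json.dumps(policy, indent=2))
```

Scoping the `sub` condition to one repository and branch keeps other repositories from assuming the ECR push role, which is the main benefit over a shared long-lived secret.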

Afterward, send the deploy event to PipeCD with the pipe-cd/actions-event-register action to trigger the CD flow.

# .github/workflows/distribute_pipeline.yml
jobs:
  container-images:
    outputs:
      version: ${{ steps.meta.outputs.version }}
    ...
  send-deploy-event:
    runs-on: ubuntu-latest
    needs: [container-images]
    env:
      version: ${{ needs.container-images.outputs.version }}
    steps:
      - uses: pipe-cd/actions-event-register@v1
        with:
          event-name: sm-pipeline-pipecd-image-update
          labels: app=sm-pipeline-pipecd
          data: "your-image-arn:${{ env.version }}"
          pipectl-version: v0.50.0
          ...

PipeCD App & ECS Task Definition

On the PipeCD side, we need to configure the ECS App to deploy the newly built Docker image. This app uses an ECS standalone task, so make sure taskdef.yaml is also included in the PipeCD app folder. The taskdef.yaml file defines the ECS task’s specifications, such as the container image, resource requirements, and other runtime configurations. The event watcher serves as the bridge between the CI and CD processes. It receives the events registered by the CI pipeline and automatically updates the image field in taskdef.yaml. By committing the updated file to the repository, the event watcher ensures that the ECS task uses the latest built image version, keeping the CI and CD workflows in sync.

---
# pipecd/app.pipecd.yaml
apiVersion: pipecd.dev/v1beta1
kind: ECSApp
spec:
  name: sm-pipeline-pipecd
  labels:
    layer: sm-pipeline-pipecd
  input:
    taskDefinitionFile: taskdef.yaml
    clusterArn: "your-ecs-cluster-arn"
    awsvpcConfiguration:
      securityGroups:
        - sg-0
      subnets:
        - subnet-0
        - subnet-1
  description: The AWS SageMaker Pipeline CD with PipeCD
  eventWatcher:
    - matcher:
        name: sm-pipeline-pipecd-image-update
        labels:
          app: sm-pipeline-pipecd
      handler:
        type: GIT_UPDATE
        config:
          commitMessage: "Upgrade sm-pipeline-pipecd image to the latest version"
          replacements:
            - file: taskdef.yaml
              yamlField: $.containerDefinitions[0].image
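To make the event watcher's role more concrete, here is a simplified model of what the matcher and the `yamlField` replacement do. This is not PipeCD's code: the function names are hypothetical, the matching rule (same event name and same label set) is an assumption about the behavior, and the sketch works on an already-parsed task definition rather than on the YAML file itself.

```python
import re


def event_matches(event: dict, matcher: dict) -> bool:
    """Assumed rule: the event name and the full label set must both be equal."""
    return (
        event["name"] == matcher["name"]
        and event.get("labels", {}) == matcher.get("labels", {})
    )


def set_yaml_field(doc, path: str, value):
    """Set the value at a simple path such as $.containerDefinitions[0].image."""
    # Only .key and [index] segments are supported in this sketch.
    tokens = re.findall(r"\.([A-Za-z_][\w-]*)|\[(\d+)\]", path)
    keys = [int(index) if index else key for key, index in tokens]
    if not keys:
        raise ValueError(f"unsupported path: {path}")
    node = doc
    for key in keys[:-1]:
        node = node[key]
    node[keys[-1]] = value
    return doc


if __name__ == "__main__":
    # Parsed form of taskdef.yaml, reduced to the relevant field.
    taskdef = {"containerDefinitions": [{"name": "sm-pipeline-pipecd", "image": "old"}]}
    matcher = {"name": "sm-pipeline-pipecd-image-update", "labels": {"app": "sm-pipeline-pipecd"}}
    # Event as registered by the CI job; the tag v1.2.3 is a made-up example.
    event = {**matcher, "data": "your-image-arn:v1.2.3"}
    if event_matches(event, matcher):
        set_yaml_field(taskdef, "$.containerDefinitions[0].image", event["data"])
    print(taskdef["containerDefinitions"][0]["image"])
```

In the real flow, the handler commits the changed file to the repository, and that commit is what triggers the deployment.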

---
# pipecd/taskdef.yaml
family: sm-pipeline-pipecd
networkMode: awsvpc
taskRoleArn: "your-task-role-arn"
executionRoleArn: "your-execution-task-role-arn"
cpu: 256
memory: 512
requiresCompatibilities:
  - FARGATE
containerDefinitions:
  - name: sm-pipeline-pipecd
    image: "your-published-image-on-ecr"
    essential: true
    entryPoint:
      - /venv/bin/python3
      - run_pipeline.py
    ...

Results

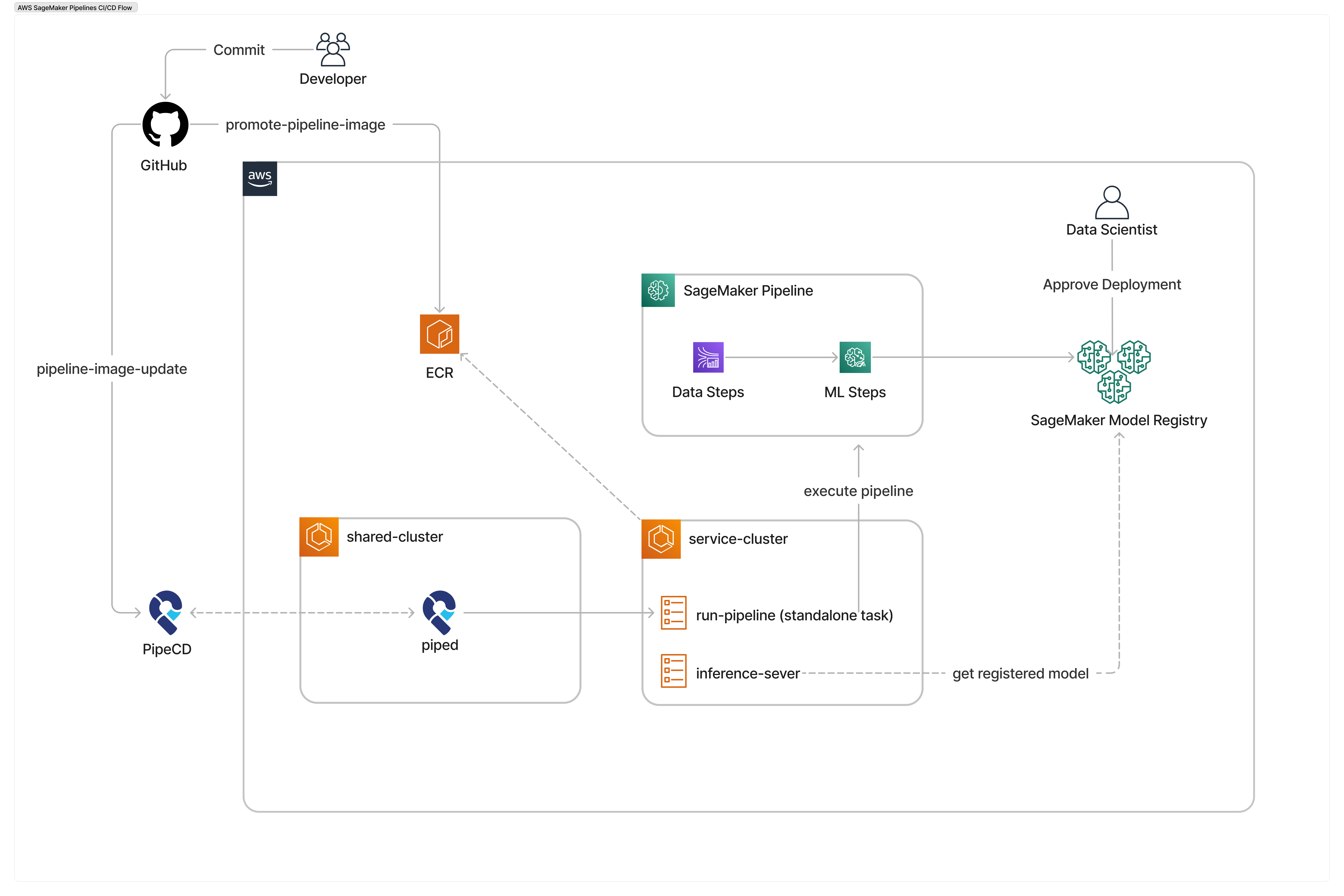

When the CD workflow is triggered on PipeCD, it initiates a Quick Sync deployment for the ECS standalone task. The deployment registers the updated task definition and runs the task with the new image, so the changes take effect in the runtime environment right away.
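For readers less familiar with ECS standalone tasks, the sketch below shows roughly what launching one amounts to at the AWS API level. It is not PipeCD's implementation. It only assembles the arguments for the ECS RunTask call (`run_task` in boto3) from the same values that appear in app.pipecd.yaml. The function names are hypothetical, and the client is passed in so the code has no hard dependency on boto3.

```python
def build_run_task_request(cluster_arn, task_definition, subnets, security_groups):
    """Assemble keyword arguments for the ECS RunTask API."""
    return {
        "cluster": cluster_arn,
        "taskDefinition": task_definition,  # family, family:revision, or full ARN
        "launchType": "FARGATE",
        "count": 1,
        "networkConfiguration": {
            "awsvpcConfiguration": {
                "subnets": list(subnets),
                "securityGroups": list(security_groups),
            }
        },
    }


def run_standalone_task(ecs_client, request):
    """Launch the task and return its ARN.

    ecs_client is expected to behave like boto3.client("ecs").
    """
    response = ecs_client.run_task(**request)
    if response.get("failures"):
        raise RuntimeError(f"RunTask reported failures: {response['failures']}")
    return response["tasks"][0]["taskArn"]


if __name__ == "__main__":
    # Placeholder values mirroring the configuration files in this post.
    request = build_run_task_request(
        "your-ecs-cluster-arn",
        "sm-pipeline-pipecd",
        ["subnet-0", "subnet-1"],
        ["sg-0"],
    )
    print(request)
```

Unlike an ECS service, a standalone task is not restarted or kept at a desired count. It runs once and exits, which suits a job whose only purpose is to update and start the pipeline.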

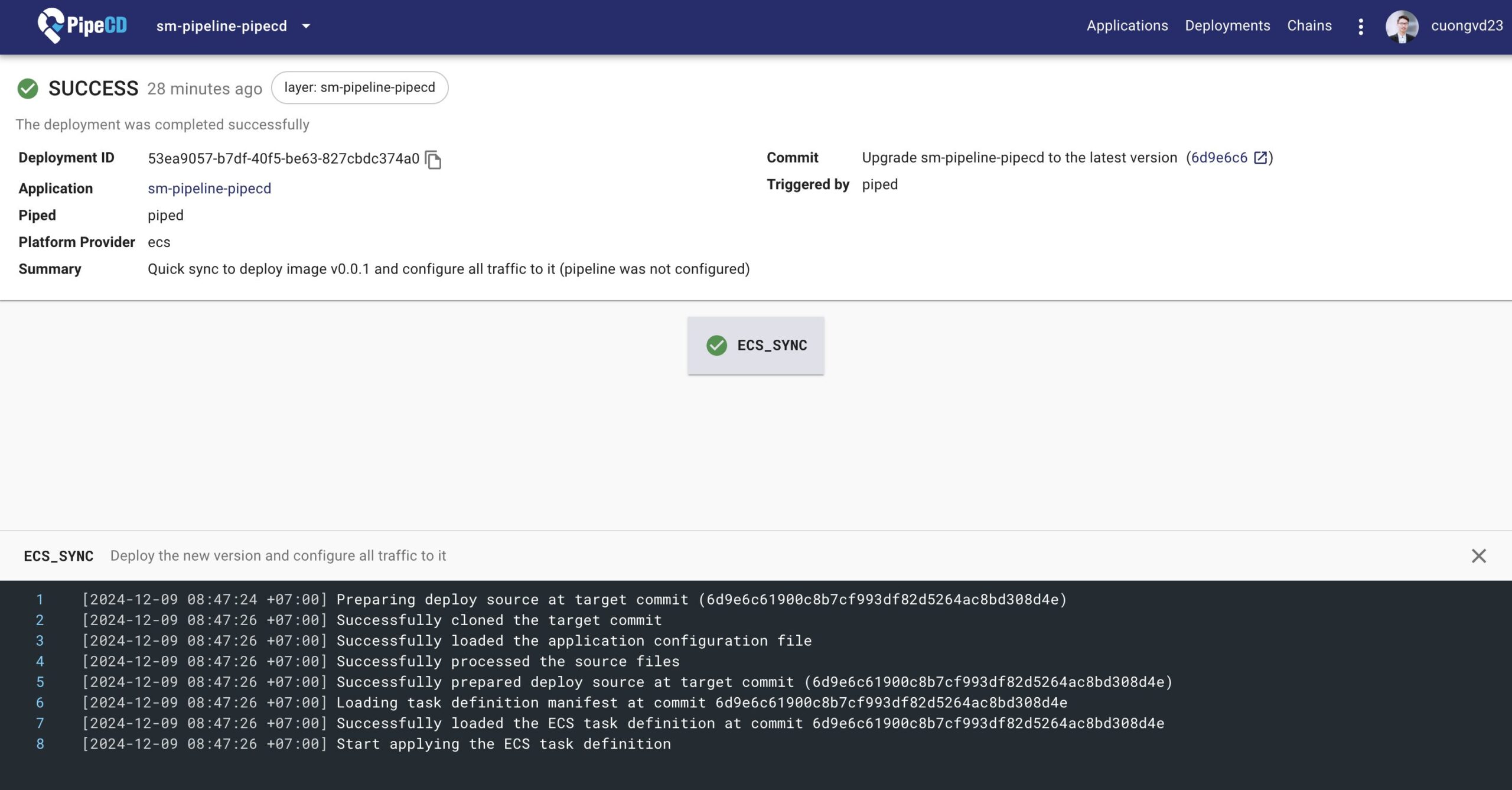

If the ECS task runs successfully, the pipeline’s directed acyclic graph (DAG) will be created and executed on SageMaker.
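The post does not include `run_pipeline.py` itself, so the following is only a guess at its core logic: create or update the pipeline definition, then start one execution. It assumes the object passed in is a `sagemaker.workflow.pipeline.Pipeline` built elsewhere with the SageMaker Python SDK. The helper name `upsert_and_start` and the role ARN placeholder are hypothetical.

```python
def upsert_and_start(pipeline, role_arn: str, wait: bool = False) -> str:
    """Create or update the pipeline definition, start one execution, return its ARN.

    `pipeline` is expected to be a sagemaker.workflow.pipeline.Pipeline instance.
    """
    # upsert() creates the pipeline if it does not exist, otherwise updates it.
    pipeline.upsert(role_arn=role_arn)
    # start() launches a new execution of the pipeline's DAG.
    execution = pipeline.start()
    if wait:
        # Block until the execution finishes; useful if the task's exit code
        # should reflect the pipeline result.
        execution.wait()
    return execution.arn


# Example wiring inside run_pipeline.py (assumes a build_pipeline() helper
# that returns the Pipeline object; that helper is not shown in this post):
#
#   pipeline = build_pipeline()
#   print(upsert_and_start(pipeline, "your-sagemaker-execution-role-arn"))
```

Keeping the SDK calls behind a small function like this also makes the entry point easy to test with a stub pipeline object, without touching AWS.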

When the pipeline execution is finished, the model will appear in the Model Registry and be ready to be deployed to the inference server. However, because PipeCD currently cannot synchronize the status of ECS standalone tasks, I have not yet been able to automate the rollout of new ML models to the inference server.

Improvements

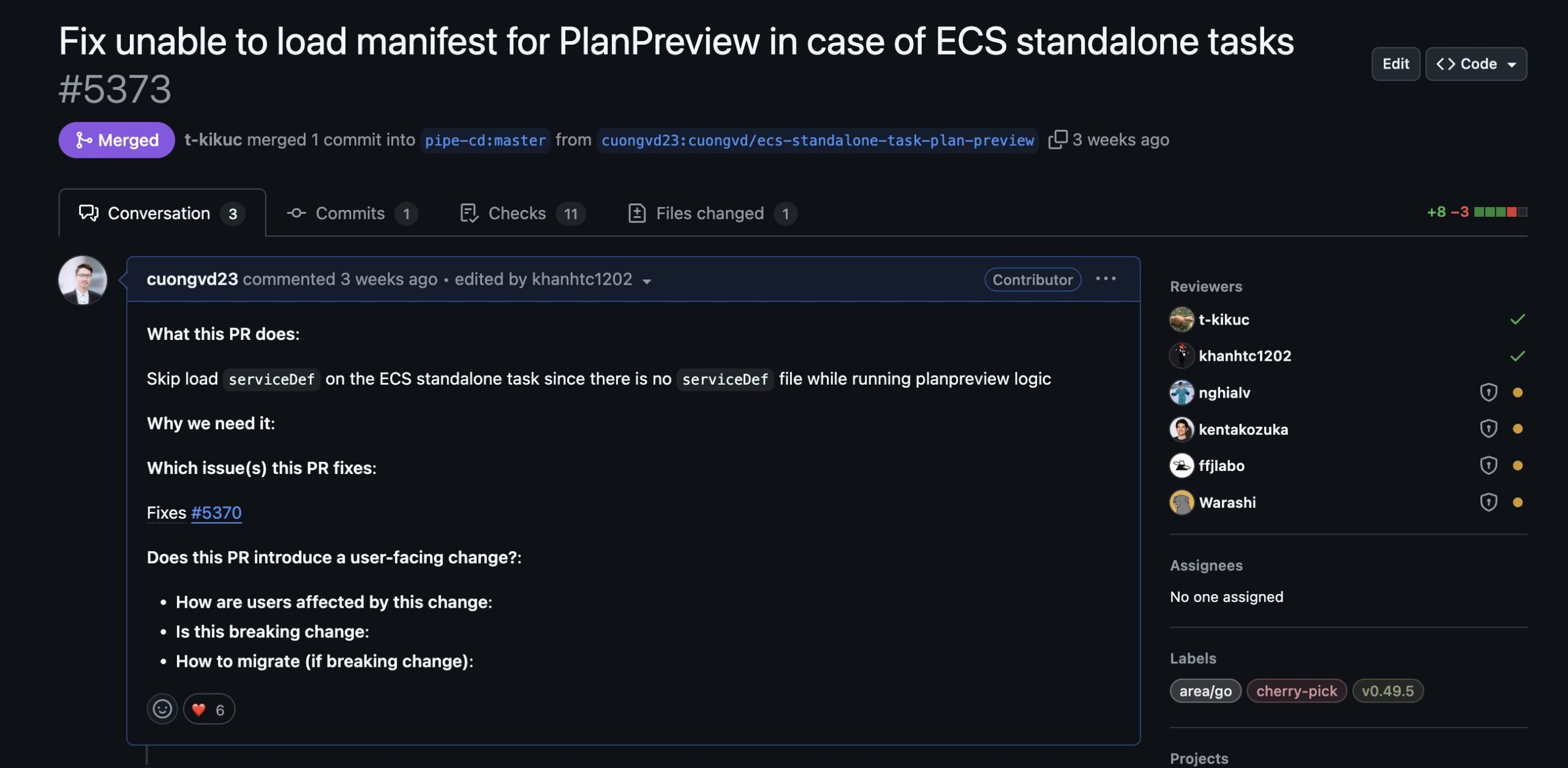

While working on this task, I encountered an issue in PipeCD related to the standalone ECS task my team was using. The bug appeared only during the PlanReview stage of the CI process, where changes in the app configuration are displayed, and it did not affect the subsequent execution. Still, I am proud that my contribution to the PipeCD open-source project resolved it. Furthermore, I intend to contribute to enhancing PipeCD’s ability to track the status of standalone ECS tasks. This improvement will benefit not only the CI/CD workflow for my team’s project but also other PipeCD users.

Conclusion

In this article, I presented the CI/CD integration for SageMaker Pipeline on my team’s ECS cluster infrastructure. I can’t wait to see how PipeCD’s upcoming features will allow us to fully automate the rollout of new ML models to the inference backend server. I hope this blog will inspire new ideas for those who have been looking for a way to integrate CI/CD tools with AWS SageMaker Pipeline.