Introduction

When building machine learning pipelines on AWS SageMaker, one of the biggest challenges we faced was the slow feedback loop. Executing directly in the cloud can be costly and time-consuming—especially when we just want to test preprocessing code or debug scripts.

That’s where SageMaker local mode comes in. It enables us to run training and inference workflows on our own machines using Docker, providing faster and more cost-effective iterations while remaining compatible with SageMaker’s cloud environment.

Local mode currently supports steps like Training, Processing, Transform, Model, Condition, and Fail. For simplicity, we’ll use the @step decorator in our examples to quickly turn Python functions into Training steps, without going deep into pipeline orchestration.

Prerequisites

SageMaker local mode relies on Docker containers to replicate the execution environment. This gives us the chance to integrate our development process with Docker Engine and keep everything reproducible. To get started, make sure your system has the following:

- Docker Runtime

  If your system does not already have the docker and docker compose commands available, you’ll need a container runtime:

  - Docker Desktop: widely used, but note that it requires a paid subscription for use in larger enterprises.
  - Colima (open-source): a lightweight alternative for macOS/Linux users.

- Python SageMaker SDK

  Installing the SageMaker Python SDK with local mode support also lets IDEs provide better auto-completion and suggestions when working with local mode (the quotes keep shells such as zsh from expanding the brackets):

```shell
pip install 'sagemaker[local]'
```

Executing SageMaker pipeline locally

Initializing SageMaker local session

To execute a pipeline locally, we pass a LocalPipelineSession as the sagemaker_session argument of Pipeline. Switching it back to a PipelineSession is all it takes to run the same pipeline in the AWS-managed environment.

```python
from sagemaker.workflow.function_step import step
from sagemaker.workflow.pipeline import Pipeline
from sagemaker.workflow.pipeline_context import LocalPipelineSession, PipelineSession


@step(display_name="step_name")
def step_function():
    ...


# SageMaker session
sagemaker_session = LocalPipelineSession()  # or PipelineSession()

# Pipeline instance
pipeline = Pipeline(
    ...,  # other Pipeline arguments
    steps=[step_function()],
    sagemaker_session=sagemaker_session,
)
```

Easy, isn’t it? To make it even more convenient, we can pick the right sagemaker_session from configuration instead of editing code:

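```python
from dataclasses import dataclass

from sagemaker.session import Session
from sagemaker.workflow.pipeline import Pipeline
from sagemaker.workflow.pipeline_context import LocalPipelineSession, PipelineSession


@dataclass
class PipelineConfig:
    s3_prefix: str
    s3_bucket: str
    s3_endpoint_url: str | None = None  # custom S3 endpoint, only used in local mode
    is_local: bool = False


def get_session(config: PipelineConfig) -> Session:
    """Return a local session for development or a cloud session for AWS."""
    if config.is_local:
        return LocalPipelineSession(
            s3_endpoint_url=config.s3_endpoint_url,
            default_bucket=config.s3_bucket,
            default_bucket_prefix=config.s3_prefix,
        )
    return PipelineSession(
        default_bucket=config.s3_bucket,
        default_bucket_prefix=config.s3_prefix,
    )


# Pipeline instance (config is the PipelineConfig loaded by the runner script)
pipeline = Pipeline(
    ...,
    sagemaker_session=get_session(config),
)
```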
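The snippet above assumes a config object already exists. Since the runner script is launched with a --config-data flag carrying a base64-encoded config, here is a minimal sketch of how run_pipeline.py might decode it. It assumes the payload is base64-encoded JSON whose keys match the PipelineConfig fields; parse_config is a hypothetical helper of our own, not part of the SageMaker SDK.

```python
import argparse
import base64
import json
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class PipelineConfig:
    s3_prefix: str
    s3_bucket: str
    s3_endpoint_url: Optional[str] = None
    is_local: bool = False


def parse_config(argv: Optional[List[str]] = None) -> PipelineConfig:
    """Build a PipelineConfig from the --config-data flag.

    Assumption (hypothetical format): the flag value is base64-encoded JSON
    whose keys match the PipelineConfig fields.
    """
    parser = argparse.ArgumentParser(description="Run the SageMaker pipeline")
    parser.add_argument("--config-data", required=True, help="base64-encoded JSON config")
    args = parser.parse_args(argv)  # falls back to sys.argv when argv is None
    payload = json.loads(base64.b64decode(args.config_data).decode("utf-8"))
    return PipelineConfig(**payload)
```

In the runner, get_session(parse_config()) would then return a LocalPipelineSession or a PipelineSession depending on is_local, so switching environments only means changing the encoded config.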

With the PipelineConfig class, we can not only select the session from configuration but also reuse the same object for S3 settings such as the bucket, prefix, and endpoint. Now we are ready to run the pipeline in SageMaker local mode, following the AWS documentation:

```shell
python run_pipeline.py --config-data=<base64-encoded-config>
```

S3 connections

```text
### Output logs
...
botocore.exceptions.ClientError: An error occurred (InvalidAccessKeyId) when calling the ListBuckets operation: The AWS Access Key Id you provided does not exist in our records.
```

Oops, the run fails while connecting to AWS S3. But why does a local run need S3 at all? Even in local mode, a SageMaker pipeline relies on S3 to store and exchange step artifacts, such as serialized functions and their arguments. What differs between the session types is which S3 we talk to: LocalPipelineSession accepts parameters like s3_endpoint_url, so we can point it at any S3-compatible storage. In other words, we need an S3-compatible store running locally to make local pipelines work seamlessly. This is where Docker saved the day.

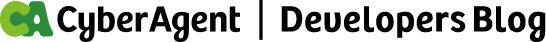

```shell
docker run -p 9000:9000 -d bitnami/minio:2025
```

MinIO is a high-performance, S3-compatible object store. Thanks to Docker, we can boot up a local stand-in for AWS S3 at http://localhost:9000. The diagram above gives an overview of how all the local-mode components interact with each other in this setup.
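Because the container starts in the background (-d), a pipeline launched right afterwards can race MinIO's startup. A minimal readiness-check sketch follows; it assumes MinIO's unauthenticated liveness endpoint /minio/health/live, and wait_for_minio is a hypothetical helper of our own built only on the standard library.

```python
import time
import urllib.request


def wait_for_minio(
    endpoint: str = "http://localhost:9000",
    timeout: float = 30.0,
    interval: float = 1.0,
) -> bool:
    """Poll MinIO's liveness endpoint until it returns HTTP 200 or the timeout expires.

    Returns True once MinIO answers, False if it never did within `timeout` seconds.
    """
    url = endpoint.rstrip("/") + "/minio/health/live"
    deadline = time.monotonic() + timeout
    while True:
        try:
            with urllib.request.urlopen(url, timeout=interval) as response:
                if response.status == 200:
                    return True
        except OSError:
            # URLError/HTTPError, refused connections and socket timeouts are all
            # OSError subclasses: treat them as "not ready yet".
            pass
        if time.monotonic() >= deadline:
            return False
        time.sleep(interval)
```

For example, calling wait_for_minio() right after docker run and aborting when it returns False turns a confusing S3 error later in the pipeline into an explicit startup failure.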

Let’s set s3_endpoint_url in the config to http://localhost:9000 and see what comes next.

```text
### Output logs
...
INFO [sagemaker.remote_function] Uploading serialized function code to s3://sagemaker-data/pipelines/step-name/function
INFO [sagemaker.remote_function] Uploading serialized function arguments to s3://sagemaker-data/pipelines/step-name/arguments
INFO [sagemaker.remote_function] Copied dependencies file at 'pipeline/requirements.txt' to '/var/folders/.../requirements.txt'
INFO [sagemaker.remote_function] Successfully uploaded dependencies and pre execution scripts to 's3://sagemaker-data/pipelines/step-name/pre_exec_script_and_dependencies'
INFO [sagemaker.remote_function] Copied user workspace to '/var/folders/.../temp_workspace/sagemaker_remote_function_workspace'
INFO [sagemaker.remote_function] Successfully created workdir archive at '/var/folders/.../workspace.zip'
INFO [sagemaker.remote_function] Successfully uploaded workdir to 's3://sagemaker-data/pipelines/sm_rf_user_ws/workspace.zip'
...
INFO [sagemaker.local.entities] Starting pipeline step: 'step-name'
...
sagemaker.remote_function.errors.ServiceError: Failed to read serialized bytes from s3://sagemaker-data/pipelines/step-name/function/metadata.json: ClientError('An error occurred (403) when calling the HeadObject operation: Forbidden')
```

The serialized pipeline step was uploaded to S3 (MinIO) successfully, and execution started as expected. However, the step then failed with a 403 (Forbidden) error from the S3 client. After digging into the SageMaker Python SDK, we found the root cause: the environment variable used to pass the custom S3 endpoint into the step container is not one that boto3 reads, so the container sent its requests to the real AWS S3 instead of MinIO. As a temporary workaround, we can apply a monkey patch (overriding a value at runtime) until the issue is fixed upstream.

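```python
import sagemaker.local.image

# Pass the custom S3 endpoint to step containers under the name boto3 reads.
# Apply this before the pipeline is created and executed.
sagemaker.local.image.S3_ENDPOINT_URL_ENV_NAME = "AWS_ENDPOINT_URL_S3"
```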
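Why does the variable name matter? The code inside the step container talks to S3 through boto3, which only honors specific environment variables for custom endpoints. The sketch below is a simplified model of that lookup in recent botocore releases, written by us for illustration and not botocore's actual code: the service-specific AWS_ENDPOINT_URL_S3 wins over the global AWS_ENDPOINT_URL, AWS_IGNORE_CONFIGURED_ENDPOINT_URLS disables both, and any other name is simply never read, so the client falls back to the default AWS endpoint.

```python
from typing import Mapping, Optional


def resolve_s3_endpoint(env: Mapping[str, str]) -> Optional[str]:
    """Simplified model of how boto3 picks a custom S3 endpoint from env vars.

    Returns None when no custom endpoint applies, i.e. the client would use
    the default AWS S3 endpoint for its region.
    """
    if env.get("AWS_IGNORE_CONFIGURED_ENDPOINT_URLS", "").lower() == "true":
        return None
    # The service-specific variable takes precedence over the global one.
    for name in ("AWS_ENDPOINT_URL_S3", "AWS_ENDPOINT_URL"):
        if env.get(name):
            return env[name]
    # Any other variable name is not consulted at all.
    return None
```

This is why renaming the variable, rather than changing its value, was enough to make the step container reach MinIO.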

```text
### Output logs
...
sagemaker.remote_function.errors.ServiceError: Failed to read serialized bytes from s3://sagemaker-data/pipelines/step-name/function/metadata.json: EndpointConnectionError('Could not connect to the endpoint URL: "http://localhost:9000/sagemaker-data/pipelines/step-name/function/metadata.json"')
```

After rerunning, the pipeline picked up the custom endpoint of the local MinIO container. However, it then failed with a new EndpointConnectionError. Under the hood, SageMaker spins up Docker containers that mimic SageMaker’s execution environment. The architecture diagram shows that the container invoking the function (created from the step/docker-compose.yaml file) is placed inside an isolated Docker network named sagemaker-local. This means the MinIO server must be attached to the same network for the connection to succeed.

```yaml
### step/docker-compose.yaml
networks:
  sagemaker-local:
    name: sagemaker-local
services:
  algo-1-xxxxx:
    container_name: xxxxxxxxxx-algo-1-xxxxx
    environment:
      - AWS_REGION=ap-northeast-1
      - TRAINING_JOB_NAME=step_name-xxxx
      - AWS_ENDPOINT_URL_S3=http://localhost:9000
    image: sm-pipeline-pipecd:latest
    networks:
      sagemaker-local:
        aliases:
          - algo-1-xxxxx
```

Looking at the step/docker-compose.yaml generated by the SageMaker SDK, we noticed that the sagemaker-local network is created dynamically at runtime. The problem with that approach is that we can’t fully control how the network is configured or make sure other services (like MinIO) are attached to it ahead of time. To solve this, we decided to pre-create the network ourselves and regenerate step/docker-compose.yaml so that it reuses this externally managed network. This way, all containers, both the SageMaker step containers and the storage service (MinIO), can communicate reliably within the same network. Once again, a bit of monkey patching came to the rescue.

```python
import os
from typing import Any

import sagemaker.local.image
import yaml


class SageMakerContainer(sagemaker.local.image._SageMakerContainer):
    def _generate_compose_file(
        self,
        command: str,
        additional_volumes: list[str] | None = None,
        additional_env_vars: dict[str, str] | None = None,
    ) -> dict[str, Any]:
        # Let the SDK build (and write) the compose file as usual.
        content: dict[str, Any] = super()._generate_compose_file(
            command, additional_volumes, additional_env_vars
        )
        # Reuse the pre-created network instead of letting Compose create it.
        if content.get("networks", {}).get("sagemaker-local"):
            content["networks"]["sagemaker-local"]["external"] = True  # marks as external network

        # Overwrite the file the SDK just wrote with the patched content.
        docker_compose_path = os.path.join(
            self.container_root or "",
            sagemaker.local.image.DOCKER_COMPOSE_FILENAME,
        )
        yaml_content = yaml.dump(content, default_flow_style=False)
        with open(docker_compose_path, "w") as f:
            f.write(yaml_content)
        return content


sagemaker.local.image._SageMakerContainer = SageMakerContainer
```
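The patch above only marks sagemaker-local as external, so the network must exist before any step container starts; otherwise docker compose refuses to start because the external network cannot be found. A minimal sketch of pre-creating it with the Docker CLI (docker network inspect and docker network create), assuming docker is on the PATH. ensure_network and run_docker are hypothetical helpers, and the runner parameter exists only so the logic can be tested without Docker.

```python
import subprocess
from typing import Callable, List

# A runner executes a command and returns its CompletedProcess; injectable for tests.
Runner = Callable[[List[str]], subprocess.CompletedProcess]


def run_docker(cmd: List[str]) -> subprocess.CompletedProcess:
    """Run a Docker CLI command, capturing output instead of raising on failure."""
    return subprocess.run(cmd, capture_output=True, text=True, check=False)


def ensure_network(name: str = "sagemaker-local", runner: Runner = run_docker) -> bool:
    """Create a bridge network called `name` unless it already exists.

    Returns True if the network was created, False if it was already there.
    """
    if runner(["docker", "network", "inspect", name]).returncode == 0:
        return False  # already exists: reuse it as an external network
    result = runner(["docker", "network", "create", "--driver", "bridge", name])
    if result.returncode != 0:
        raise RuntimeError(f"could not create network {name!r}: {result.stderr}")
    return True
```

Running ensure_network() once before starting MinIO and the pipeline keeps the call idempotent: a second run simply finds the existing network.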

After recreating MinIO attached to the sagemaker-local network, we ran into the next issue: containers do not share localhost. Inside a container, localhost always points back to the container itself. The fix was to update s3_endpoint_url to http://minio:9000, so that step containers reach MinIO by its DNS name on the shared network.

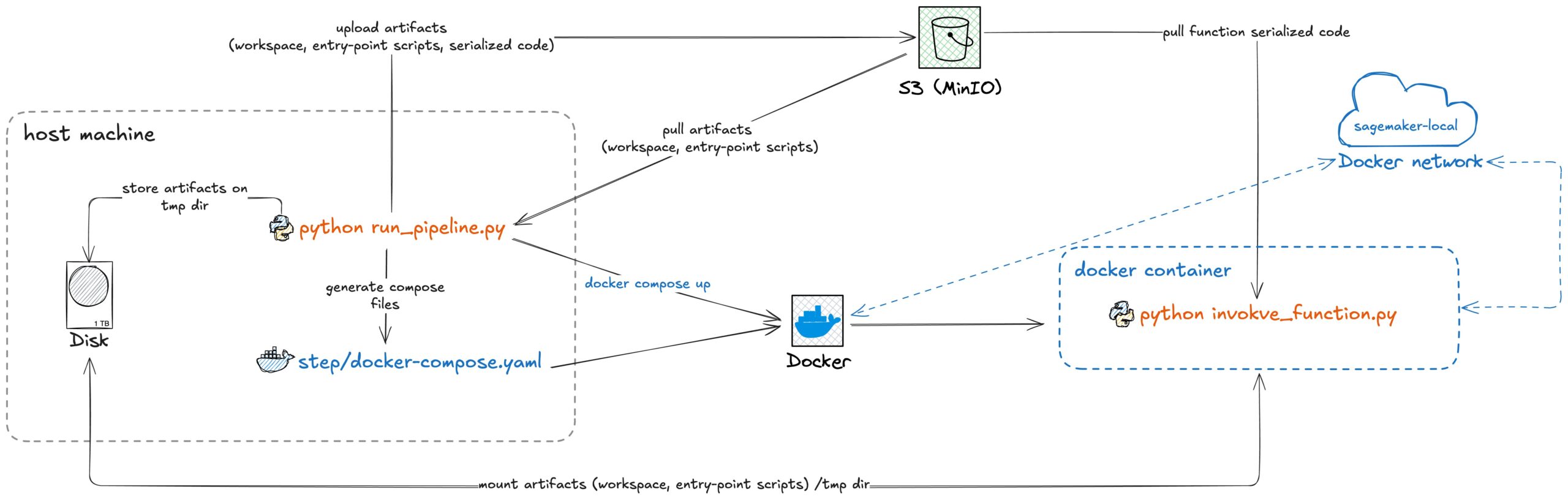

Docker container development workflow

Since our main script was running on the host machine, it could not resolve minio as a hostname: that name only exists inside the Docker network. To address this, we packaged the main script into its own Docker image and adopted a containerized development workflow. This way, both the main script and the sub-containers share the same network and can communicate reliably.
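The failure is a plain name-resolution problem: Docker's embedded DNS answers for container names such as minio only to containers attached to the same user-defined network. A hypothetical stdlib helper, not part of the project, to check this from wherever the runner executes:

```python
import socket


def can_resolve(hostname: str) -> bool:
    """Return True if hostname resolves from the current machine or container."""
    try:
        socket.getaddrinfo(hostname, None)
    except socket.gaierror:
        return False
    return True
```

On the host, can_resolve("minio") is expected to return False; inside a container attached to sagemaker-local, it should return True.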

The runner container is given access to the host’s Docker socket, which lets it spin up sub-containers for each SageMaker local step. The final challenge was deciding on a volume mounting strategy that works for both the main container and its sub-containers.

By default, SageMaker local mode uses the host’s /tmp directory for intermediate data, but mounting the host’s /tmp directly raised security concerns. Instead, we created a dedicated tmp directory inside our project workspace and mounted it as /tmp in the main container. Because the sub-containers are started by the host’s Docker daemon, the volume paths in their compose files are resolved on the host, not inside the main container. We therefore patched sagemaker.local.image._Volume so that each host directory is prefixed with the project path on the host (HOST_CWD). This way, all containers see the same temporary files, while the mounts stay isolated within the project workspace.

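```python
import os

import sagemaker.local.image


class ContainerVolume(sagemaker.local.image._Volume):
    def __init__(
        self, host_dir: str, container_dir: str | None = None, channel: str | None = None
    ) -> None:
        # Bind mounts are resolved by the host's Docker daemon, so prefix every
        # host path with the project path on the host, e.g. /tmp/tmpXXXX inside
        # the runner becomes $HOST_CWD/tmp/tmpXXXX.
        if cwd := os.getenv("HOST_CWD"):
            host_dir = cwd + host_dir
        super().__init__(host_dir, container_dir, channel)


sagemaker.local.image._Volume = ContainerVolume
```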
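ContainerVolume prefixes every path with HOST_CWD. A slightly stricter variant of the same idea, sketched here under our own assumptions and not taken from SageMaker or the project, translates only paths that fall under one of the runner's known bind mounts and leaves everything else untouched. The mounts mapping is hypothetical and would mirror the runner's volumes entries.

```python
import posixpath
from typing import Mapping


def to_host_path(container_path: str, mounts: Mapping[str, str]) -> str:
    """Translate a path seen inside the runner container into the host's path.

    `mounts` maps container mount points to host directories, mirroring the
    runner's bind mounts, e.g. {"/tmp": "/Users/sagemaker/sm-pipeline-pipecd/tmp"}.
    The longest matching mount point wins; paths outside every mount are
    returned unchanged.
    """
    path = posixpath.normpath(container_path)
    for mount_point in sorted(mounts, key=len, reverse=True):
        target = posixpath.normpath(mount_point)
        prefix = target.rstrip("/") + "/"
        if path == target or path.startswith(prefix):
            host_root = posixpath.normpath(mounts[mount_point])
            relative = posixpath.relpath(path, target)
            return host_root if relative == "." else posixpath.join(host_root, relative)
    return container_path
```

The trade-off: the prefix approach is shorter, while the mount-table approach avoids rewriting paths that were never under the shared tmp directory.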

Combining all these changes, we can finally spin up the whole development flow with the following compose file:

```yaml
services:
  minio:
    image: docker.io/bitnami/minio:2025
    container_name: minio
    ports:
      - "9000:9000"
    volumes:
      - "minio_data:/bitnami/minio/data"
    environment:
      - MINIO_DEFAULT_BUCKETS=sagemaker-data
    networks:
      - sagemaker-local

  pipeline:
    build:
      context: .
      dockerfile: pipeline/Dockerfile
      tags:
        - sm-pipeline-pipecd:latest
    volumes:
      - /var/run/docker.sock:/var/run/docker.sock # share Docker daemon with host machine
      - /Users/sagemaker/sm-pipeline-pipecd/tmp/:/tmp
    container_name: sm-pipecd-pipeline
    environment:
      - HOST_CWD=${HOST_CWD} # /Users/sagemaker/sm-pipeline-pipecd
      - CONFIG_DATA=${CONFIG_DATA}
    command: >
      python3 run_pipeline.py --config-data $CONFIG_DATA
    networks:
      - sagemaker-local

networks:
  sagemaker-local:
    name: "sagemaker-local"
    driver: bridge

volumes:
  minio_data:
    name: "minio_data"
    driver: local
```
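The compose file expects HOST_CWD and CONFIG_DATA in the environment of the docker compose process. A minimal launcher sketch, assuming it runs from the project root and that CONFIG_DATA is base64-encoded JSON (a hypothetical format; match whatever run_pipeline.py actually expects). build_compose_env and launch are our own names, and the --abort-on-container-exit flag stops MinIO once the runner finishes.

```python
import base64
import json
import os
import subprocess
from typing import Any, Dict, Mapping, Optional


def build_compose_env(
    config: Mapping[str, Any],
    host_cwd: str,
    base_env: Optional[Mapping[str, str]] = None,
) -> Dict[str, str]:
    """Return an environment for docker compose with HOST_CWD and CONFIG_DATA set."""
    env = dict(os.environ if base_env is None else base_env)
    env["HOST_CWD"] = host_cwd  # project path on the host, used by ContainerVolume
    env["CONFIG_DATA"] = base64.b64encode(json.dumps(config).encode("utf-8")).decode("ascii")
    return env


def launch(config: Mapping[str, Any]) -> int:
    """Build the images and run the stack; stop everything once a container exits."""
    env = build_compose_env(config, host_cwd=os.getcwd())
    cmd = ["docker", "compose", "up", "--build", "--abort-on-container-exit"]
    return subprocess.run(cmd, env=env, check=False).returncode
```

Keeping the environment construction in a pure function makes it easy to verify the encoded config without starting Docker.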

After patching, the generated step/docker-compose.yaml looks like this:

```yaml
### step/docker-compose.yaml
networks:
  sagemaker-local:
    external: true
    name: sagemaker-local
services:
  algo-1-xxxxx:
    container_name: xxxxxxxxxx-algo-1-xxxxx
    environment:
      - AWS_REGION=ap-northeast-1
      - TRAINING_JOB_NAME=step_name-xxxx
      - AWS_ENDPOINT_URL_S3=http://minio:9000
    image: sm-pipeline-pipecd:latest
    networks:
      sagemaker-local:
        aliases:
          - algo-1-xxxxx
    stdin_open: true
    tty: true
    volumes:
      - /Users/sagemaker/sm-pipeline-pipecd/tmp/tmpmaaaaaaa:/opt/ml/input/data/sagemaker_remote_function_bootstrap
      - /Users/sagemaker/sm-pipeline-pipecd/tmp/tmpzbbbbbbb:/opt/ml/input/data/pre_exec_script_and_dependencies
      - /Users/sagemaker/sm-pipeline-pipecd/tmp/tmpyccccccc:/opt/ml/input/data/YYYY-MM-DD-hh-mm-ss-fff
```
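Since the point of the dedicated tmp directory is that no step container mounts anything outside the project workspace, it can be worth asserting that on the generated file. A hypothetical check over the volumes entries in compose short syntax (SOURCE:TARGET, as generated above); parsing the YAML itself is left out to keep the sketch dependency-free.

```python
import posixpath
from typing import Iterable, List


def mounts_outside(volumes: Iterable[str], allowed_root: str) -> List[str]:
    """Return bind-mount sources from short-syntax volume entries that escape allowed_root.

    Entries look like "SOURCE:TARGET[:MODE]". Sources that are not absolute paths
    (named volumes such as "minio_data", or relative paths) are skipped; the
    generated step files use absolute paths.
    """
    root = posixpath.normpath(allowed_root)
    offending: List[str] = []
    for entry in volumes:
        source = entry.split(":", 1)[0]
        if not posixpath.isabs(source):
            continue  # named volume or relative path, not checked here
        source = posixpath.normpath(source)  # collapses "..", so traversal is caught
        if posixpath.commonpath([root, source]) != root:
            offending.append(source)
    return offending
```

An empty result means every bind mount stays under the project's tmp directory.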

Conclusion

In this post, we addressed the key limitations of SageMaker local mode and showed how to make it truly local. Through containerized workflows, patched volume mounts, and DNS-based networking, we created an environment where pipelines run end-to-end on a local machine without cloud dependencies. This not only speeds up iteration and reduces cost but also keeps the flexibility to switch back to the cloud by updating a single configuration value. You can find the full example source code in the cuongvd23/sm-pipeline-pipecd repository on GitHub.