Hello,

This is Masaya Aoyama (@amsy810) and Mizuki Urushida (@zuiurs) from CyberAgent, Inc. We are software engineers who love Kubernetes and cloud native architecture, and in this post we show how we built a Kubernetes-native testbed platform.

The testbed is published as OSS at kubernetes-native-testbed/kubernetes-native-testbed so that we can grow and share knowledge with everyone in the community.

As of the release date, the repository had nearly 900 commits. Today, Kubernetes and its surrounding ecosystem are growing rapidly, and convenient tools and middleware come out every day. While this is a good thing, many people are unsure which middleware to choose and how to integrate it with their applications. For those people, we developed this testbed, which includes various microservices built on cloud native middleware, so that they can try out the actual behavior and consider how to operate the software.

Example patterns are available in the repository, and you can try them out by modifying the existing manifests or adding something new. In addition, this testbed makes full use of Kubernetes’ CRD and Operator features and uses many Kubernetes-native methods to entrust middleware management to Kubernetes. We hope you will try this cutting-edge approach.

In this article, we explain how to use this testbed, its architecture, and the operation and implementation of the middleware it uses.

Middleware on testbed

The middleware associated with cloud native can be found on the Cloud Native Interactive Landscape page by CNCF (Cloud Native Computing Foundation). Since there is so much middleware, many people who have seen it probably feel “I don’t know what to use,” as described in the introduction.

Fortunately, because we have been in the Kubernetes community for a long time, we know the hot topics from KubeCon and other cloud-native conferences and the adoption status of CNCF projects. Based on that knowledge, we picked middleware that is often used with Kubernetes and ran it on this testbed.

However, selecting cloud-native middleware is not enough. “Cloud Native” refers to systems characterized by ease of management, observability, loose coupling, and resilience. If you run middleware on Kubernetes without an Operator, you will spend a lot of time on everything from creating resources to operating them. Therefore, this testbed makes maximum use of Operators (custom controllers) that handle such work automatically.

Kubernetes CRD and Operator

Let us explain this in more detail. Kubernetes has mechanisms called CRD (Custom Resource Definition) and Operator. A CRD lets you define your own resource types in addition to the resources that Kubernetes handles by default. For example, you can create something like the MysqlCluster resource below.

    apiVersion: mysql.presslabs.org/v1alpha1
    kind: MysqlCluster
    metadata:
      name: product-db
      namespace: product
    spec:
      replicas: 2
      secretName: product-db
      mysqlVersion: "5.7"
      backupSchedule: "0 0 */2 * *"
      backupURL: s3://product-db/

An Operator is a program that actually processes the resources created from this CRD. In the example above, the MySQL cluster is created and operated automatically after the manifest is registered. Many systems based on Kubernetes now run in this way.
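The Operator pattern can be pictured as a reconcile loop: compare the desired state declared in the resource with the state observed in the cluster, then compute the actions that close the gap. The following is a minimal, self-contained Python sketch of that idea. It is not the code of any real MySQL operator and does not talk to Kubernetes; `MysqlClusterSpec`, `reconcile`, and the pod naming scheme are hypothetical names chosen for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class MysqlClusterSpec:
    """Hypothetical, simplified view of the desired state in the manifest."""
    name: str
    replicas: int


def reconcile(spec: MysqlClusterSpec, observed_pods: list[str]) -> list[str]:
    """Return the actions needed to move the observed state toward the spec."""
    # Assumed naming scheme: <cluster name>-<index>.
    desired = [f"{spec.name}-{i}" for i in range(spec.replicas)]
    actions = []
    for pod in desired:
        if pod not in observed_pods:
            actions.append(f"create {pod}")
    for pod in observed_pods:
        if pod not in desired:
            actions.append(f"delete {pod}")
    return actions


spec = MysqlClusterSpec(name="product-db", replicas=2)
# One pod is missing, so the loop asks for it to be created.
print(reconcile(spec, observed_pods=["product-db-0"]))
# Observed state already matches the spec, so there is nothing to do.
print(reconcile(spec, observed_pods=["product-db-0", "product-db-1"]))
```

A real controller would run this comparison repeatedly, triggered by changes to the resource or to the objects it owns, which is what lets it repair drift without human intervention.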

Recently, the term “Kubernetes-native” has become common in the community. We understand it broadly as “built on the assumption of Kubernetes” and narrowly as “fully definable and configurable in manifests using Kubernetes CRDs,” as in the example above. We designed this testbed to be Kubernetes-native in the narrow sense.

Kubernetes-native infrastructure

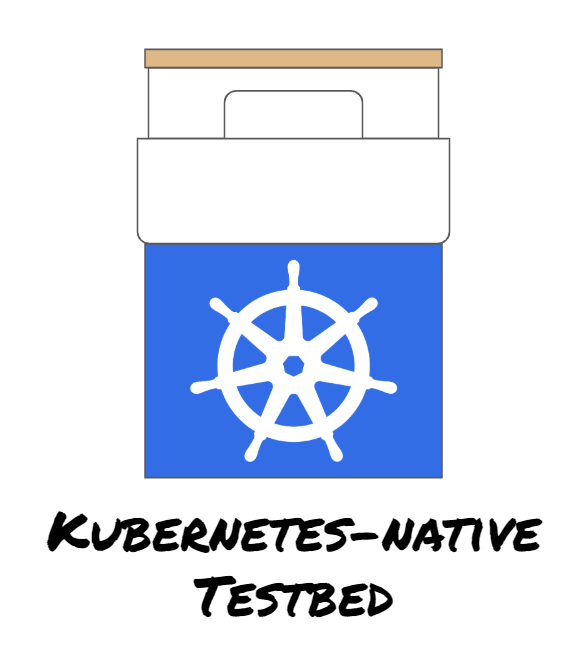

In this testbed, an e-commerce website is provided as an example that incorporates all of the middleware described above. An e-commerce site is easy for everyone to understand and has many components, so it is well suited to being built as microservices. Please look at the architecture diagram.

As you can see, everything runs on Kubernetes including CI/CD and Container Registry.

Rook (Ceph) is used for the storage infrastructure, and various stateful applications for the microservices run on it.

All communication between services uses gRPC, except for communication through the message queue. Furthermore, communication between users and the L7 load balancer (Contour) also uses gRPC (strictly speaking, gRPC-Web).

There are 11 microservices, and each service stores its data in different middleware. The role of each service is not the essence of this project, so we will not explain it here; you can infer what each one does from its name.

The following list shows the OSS used as middleware.

If you want to run the testbed in your environment or cloud, follow the instructions in the testbed repository.

kubernetes-native-testbed/kubernetes-native-testbed

GitOps

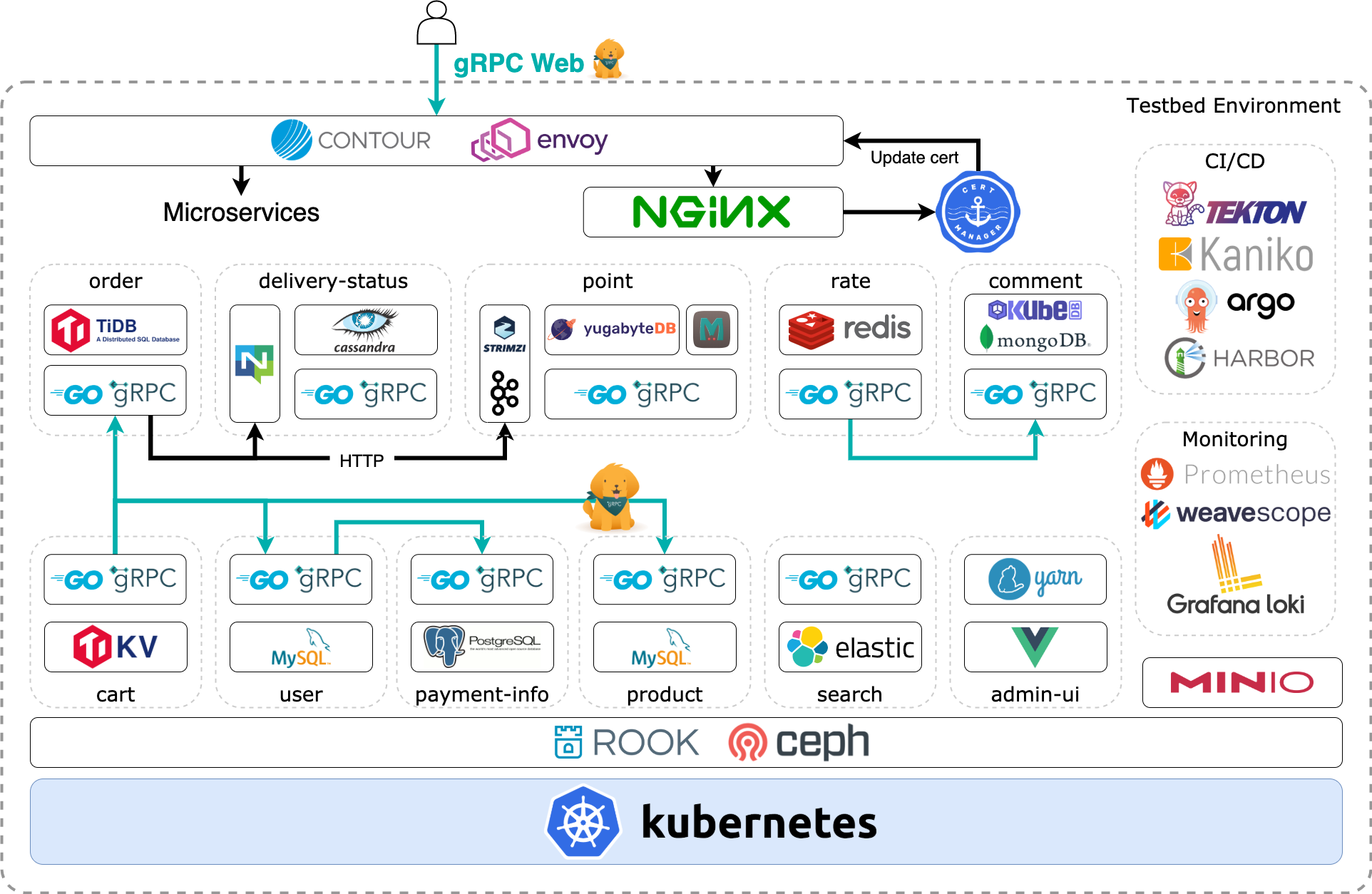

When we started this project, the first thing we thought we should prepare was the CI/CD environment. This is also described in the CNCF Trail Map. Modifying application deployments and infrastructure configurations is very challenging, even in environments that use Kubernetes. You have to build the container image and rewrite the manifest tag every time you modify the application, which is a monotonous and boring task. By eliminating this work from the beginning, you can spend more time developing applications.

The following OSS was selected for the Kubernetes-native CI/CD environment.

| OSS | Category |
|---|---|
| Tekton (Pipeline, Triggers) | Kubernetes-native CI tool |
| ArgoCD | Kubernetes-native CD tool |
| Harbor | Container registry for storing container images (CNCF Incubating project) |
| Clair | Vulnerability scanner for container images |
| Kaniko | Builds container images in Kubernetes pods |

GitOps is widely regarded as a best practice among CI/CD patterns for Kubernetes environments, so we built a GitOps environment with the OSS described above.
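To make the "rewrite the manifest tag" step concrete, here is a minimal Python sketch of what a CI job might do before committing to the manifest repository: replace the tag on the matching `image:` line and leave everything else untouched. This is only an illustration under stated assumptions. It does not call Tekton or ArgoCD, the function name `update_image_tag` and the registry `registry.example.com` are hypothetical, and the manifest is a trimmed fragment rather than a complete Deployment.

```python
import re


def update_image_tag(manifest: str, image: str, new_tag: str) -> str:
    """Replace the tag of `image` on every matching `image:` line."""
    pattern = re.compile(
        r"^([ \t]*(?:-[ \t]*)?image:[ \t]*)" + re.escape(image) + r":\S+",
        re.MULTILINE,
    )
    # A function is used as the replacement so that backslashes in the
    # image name or tag are never interpreted as regex escapes.
    return pattern.sub(lambda m: f"{m.group(1)}{image}:{new_tag}", manifest)


# Trimmed, hypothetical manifest fragment.
manifest = """\
kind: Deployment
metadata:
  name: product
spec:
  template:
    spec:
      containers:
        - name: product
          image: registry.example.com/library/product:abc123
"""

updated = update_image_tag(
    manifest, "registry.example.com/library/product", "def456"
)
print(updated)
```

In a GitOps flow, the CI side would commit the updated manifest, and the CD side would notice the new commit and sync the cluster to it, so nobody edits the tag by hand.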

Kubernetes-native local development

The testbed is too large to run in its entirety on a local machine. For local development, we provide a workflow that uses Skaffold and Telepresence.

Kubernetes-native stateful middleware

Container technology is generally considered unsuitable for stateful applications, but we expect this perception to change gradually with the help of Operators. For example, depending on the state of a database failure, an Operator can change the members of the endpoints or take a backup automatically, so we can entrust operation to the Operator instead of a human. In other words, the Kubernetes Operator mechanism can be used to encode operational knowledge as a program and delegate management of the middleware to Kubernetes in accordance with its lifecycle. In this testbed, we run databases, KVS, message queues, and block/shared/object storage with Kubernetes Operators.
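As a rough illustration of "changing the members of the endpoints" on failure, the Python sketch below recomputes which database replicas should receive writes and reads based on their health. It is a simplified model, not the logic of any operator used in the testbed: `Replica`, `reconcile_endpoints`, and the "promote the first healthy replica" rule are assumptions made for the example, and a real operator would need a much safer election rule.

```python
from dataclasses import dataclass


@dataclass
class Replica:
    name: str
    healthy: bool
    is_primary: bool = False


def reconcile_endpoints(replicas: list[Replica]) -> dict[str, list[str]]:
    """Recompute write/read endpoint members from replica health."""
    healthy = [r for r in replicas if r.healthy]
    primary = next((r for r in healthy if r.is_primary), None)
    if primary is None and healthy:
        # Simplification: promote the first healthy replica.
        primary = healthy[0]
    for r in replicas:
        r.is_primary = r is primary
    return {
        "write": [primary.name] if primary else [],
        "read": [r.name for r in healthy],
    }


replicas = [
    Replica("product-db-0", healthy=False, is_primary=True),  # failed primary
    Replica("product-db-1", healthy=True),
]
print(reconcile_endpoints(replicas))
```

The point of the sketch is that the failover rule lives in code that runs continuously, rather than in a runbook that a human has to follow at night.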

gRPC and gRPC-web

This testbed uses gRPC for communication between microservices. gRPC APIs are described in an IDL, so the specifications are very clear. This makes it easier to implement both the server side and the client side, so gRPC is a great match for microservices developed by separate teams.

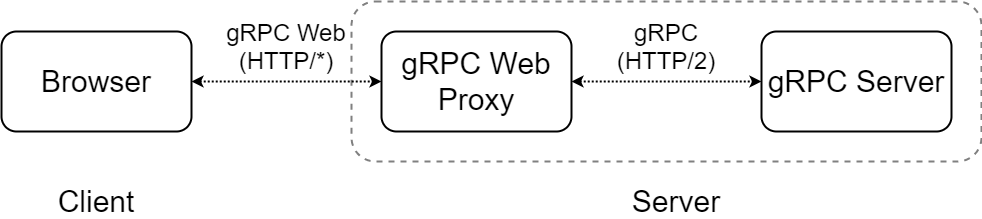

In addition, communication between browsers and microservices uses gRPC, too! Strictly speaking, this is gRPC-Web. Browsers cannot issue gRPC requests because some functions that gRPC requires are not available to them. gRPC-Web, a protocol close to gRPC, was proposed to fill this gap.

gRPC-Web requires a proxy that translates between gRPC-Web and gRPC so that a browser can communicate with the backend. Envoy, an L7 load balancer, has this proxy feature, and we use it in our testbed. Additionally, to make Envoy more Kubernetes-native, we use Contour as the Envoy controller.
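One reason the two protocols are "close" is that gRPC-Web keeps gRPC's length-prefixed message framing: each frame is a 1-byte flag followed by a 4-byte big-endian length and the payload. Because browsers cannot read HTTP/2 trailers, gRPC-Web sends the trailers as a final frame in the response body, marked by the most significant bit of the flag byte. The Python sketch below shows only this framing. It is not Envoy's implementation, it ignores the compression flag, and `encode_frame` and `decode_frames` are hypothetical helper names.

```python
import struct

TRAILER_FLAG = 0x80  # most significant bit marks a trailers frame


def encode_frame(payload: bytes, trailers: bool = False) -> bytes:
    """Prefix the payload with a 1-byte flag and a 4-byte big-endian length."""
    flag = TRAILER_FLAG if trailers else 0x00
    return struct.pack(">BI", flag, len(payload)) + payload


def decode_frames(body: bytes) -> list[tuple[bool, bytes]]:
    """Split a response body into (is_trailers, payload) frames."""
    frames = []
    offset = 0
    while offset < len(body):
        if len(body) - offset < 5:
            raise ValueError("truncated frame header")
        flag, length = struct.unpack_from(">BI", body, offset)
        offset += 5
        payload = body[offset:offset + length]
        if len(payload) != length:
            raise ValueError("truncated frame payload")
        frames.append((bool(flag & TRAILER_FLAG), payload))
        offset += length
    return frames


# One data frame (opaque message bytes) followed by a trailers frame.
body = encode_frame(b"\x0a\x05hello") + encode_frame(
    b"grpc-status: 0\r\n", trailers=True
)
for is_trailers, payload in decode_frames(body):
    print("trailers" if is_trailers else "data", payload)
```

A proxy such as Envoy sits between the browser and the backend and converts between this body-level representation and native gRPC over HTTP/2, so the backend services do not need to know about gRPC-Web at all.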

Release milestone

This testbed is still an alpha release, and we are targeting the following milestones:

- BETA-1 in June 2020 (Kubernetes Community Day Tokyo)

- BETA-2 in September 2020 (CloudNative Days Tokyo 2020, Kubernetes Forum Tokyo)

- GA at KubeCon + CloudNativeCon NA 2020, where we are aiming to introduce this project

We will prepare more documentation for future releases.

For cloud providers

We are looking for a cloud provider to sponsor a cloud environment for this project. If you can help, please email us (kubernetes-native-testbed@googlegroups.com).

Summary: One answer to realizing cloud native

In this testbed, we introduced the following three points, mainly using the Kubernetes-native ecosystem.

- A CI/CD and development environment that lets you release stably right after developing an application

- Stateful middleware automated by Kubernetes Operators

- High-performance inter-service communication with full gRPC

By using these functions, we can continue to create services and provide new functions in a stable manner with minimal effort. We think this is one answer to the realization of cloud native.

Although some parts are still under development at this point, we believe that the cloud native world will become more popular in the future. We would like you to experience this great world.

Note

- In the cloud-native era, not only applications but also the OSS that support them must be appropriately updated. For this reason, the OSS used in this testbed may be replaced by a different cloud-native product in the future. This article explains the product status as of April 9th, 2020. This testbed will keep evolving so please check out the develop branch for the latest status 🙂

- We also look forward to proposals and contributions 🙂

- The architecture of this project was designed with care, but several components were chosen by Middleware-Driven Development (MDD) for testing many components 🙂

For more detail

For more detail, see the following Japanese blog post. We will also update the documentation for future releases.

https://employment.en-japan.com/engineerhub/entry/2020/04/16/103000

Authors

Masaya Aoyama (@amsy810)

Masaya Aoyama is a software engineer at CyberAgent, Inc.

He is a co-chair of CloudNative Days Tokyo, and he also organizes Cloud Native Meetup Tokyo, Kubernetes Meetup Tokyo, and the on-site Japanese meetup at KubeCon. He passed CKAD #2 and CKA #138, and he has published several Kubernetes books.

Involved in Kubernetes for more than a few years, he contributes to Kubernetes. As a product owner, he has implemented a customized Kubernetes as a Service on a private cloud.

Mizuki Urushida (@zuiurs)

Software Engineer at CyberAgent, Inc. I’m interested in autoscalers, such as the cluster autoscaler and the horizontal pod autoscaler. I recently developed a custom HPA with forecast-based scaling using machine learning. I love golang.