Hi, my name is Yusuke Goto (@ygoto3_ / 五藤 佑典). I am a front-end developer at AbemaTV. Currently, my role is to research the newest client-side and video technologies outside Japan, so I now live in San Francisco to gather the most accurate information possible. In this post, I would like to share some of the technologies that I have come across around the city.

CyberAgent: A Video Company

CyberAgent has transformed into a video company in Japan. Naturally, we are always looking for novel technologies that can improve our video services and our users’ experience. San Francisco is the city where superior engineers gather from all over the world to create innovative products, so we think we should cover it. This is one of the reasons why I am here now.

Many Meetups Are Held Every Day in San Francisco

When it comes to researching a wide range of new technologies, I would say that going to Meetups is a great technique: getting to know the best engineers in a particular industry increases your knowledge base, and Meetups let you gain first-hand experience with related tech. You should take advantage of the opportunities at hand. Therefore, I try to go to as many Meetups as I can, especially when they are related to video, front-end, and design, which are my fields of engineering.

Since I arrived in San Francisco in late March this year, I have been to 20 Meetups and conferences:

- 3/27 Mon. : Full-stack Pair Programming
  https://www.meetup.com/hackreactor/events/238229861/

- 3/29 Wed. : Foundry and Shotgun head to San Francisco & Los Angeles
  http://blog.shotgunsoftware.com/2017/03/foundry-and-shotgun-head-to-san.html

- 3/30 Thu. : Monthly Video Meetup – Going Holographic: how to extend video to VR and beyond
  https://www.meetup.com/SF-Video-Technology/events/236633038/

- 4/3 Mon. : JS Study Group: Algorithms
  https://www.meetup.com/hackreactor/events/238624743/

- 4/5 Wed. : WaffleJS – A fun night of code, waffles, and karaoke.
  https://wafflejs.com/

- 4/6 Thu. : April SFNode at TuneIn
  https://www.meetup.com/sfnode/events/230169908/

- 4/7 Fri. : SVG and Responsive Images with Sarah Drasner, Kitt Hodsden, and Eric Portis
  https://www.meetup.com/sfhtml5/events/237831830

- 4/10 Mon. : Protonight – Pair Programming Hack Session
  https://www.meetup.com/jsmeetup/events/233028422/

- 4/11 Tue. : Video 101: How to Make Online Video Work (Uploading, Transcoding, Delivery)
  https://www.meetup.com/Synqathon-SF/events/238679432/

- 4/12 Wed. : Elm Hack Night
  https://www.meetup.com/Elm-user-group-SF/events/238458384/

- 4/13 Thu. : Hands-On Python (Formerly Beginners’ Study Group)
  https://www.meetup.com/PyLadiesSF/events/238581016/

- 4/17 Tue. – 4/19 Wed. : Facebook Developer Conference: F8
  https://www.fbf8.com/

- 4/20 Thu. : Design Systems at Salesforce
  https://www.meetup.com/San-Francisco-Design-Systems-Coalition/events/238279786/

- 4/22 Sat. – 4/27 Thu. : 2017 NAB Show
  http://www.nabshow.com/

- 4/26 Wed. : NAB hls.js Happy Hour with Peer5
  https://www.eventbrite.com/e/nab-hlsjs-happy-hour-with-peer5-su7607-tickets-32909147145

- 4/26 Wed. : DASH-IF Networking Event at NAB 2017
  https://www.eventbrite.com/e/dash-if-networking-event-at-nab-2017-registration-33089614929

- 5/8 Mon. : Protonight – Pair Programming Hack Session
  https://www.meetup.com/jsmeetup/events/234636252/

- 5/11 Thu. : Sonia Burney: Securing & Optimizing the Web with Client & Server-Side Solutions
  https://www.meetup.com/SF-Web-Performance-Group/events/239308913/

- 5/16 Tue. : Intel Software – Day 0 Google I/O Party
  https://www.eventbrite.com/e/intel-software-day-0-google-io-party-tickets-24570345565

- 5/17 Wed. – 5/19 Fri. : Google I/O 2017
  https://events.google.com/io/

You have probably noticed that some of them do not relate to video, front-end, or design. I believe it is vitally important for a beginner to expand their knowledge base into related fields. Actually, San Francisco is a kind city for newbies; however, I would rather not go too deep into that topic here, so let’s pick 6 video technology topics to introduce in this post: light field technology, TiledVR, view prediction, content adaptive bitrate, CMAF, and DASH-IF.

Light Field Technology for VR

Regarding video, Virtual Reality (a.k.a. VR) is a hot topic here in San Francisco, too. If you take part in Meetups held by video-related communities, you will find many people with a great interest in VR. At one of the Monthly Video Meetups, Ryan Damm from Visby gave a presentation about how to make use of holography to deliver a more realistic multi-perspective experience to users. Their specialization is light field technology.

Light field is a different way of capturing and displaying a 3D object than a polygon model. A light field camera looks like the picture below:

It has a bunch of lenses to capture the vector information of light rays. Whereas a VR device using a polygon model calculates a person’s view based on geometry, a device using light field data just picks the rays that the person would see.

However, light field needs a large amount of data to display a 3D object, because light field information is made up of 4-dimensional data retrieved from a huge number of light rays. Moreover, it cannot create a view for which the light field camera has not captured light rays.
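To get a feel for why the data is so large, here is a rough back-of-the-envelope sketch in Python. It assumes the common two-plane description of a light field, where two dimensions index the viewpoint and two index the pixel. The grid size, the resolution, and the name `light_field_bytes` are illustrative assumptions, not figures from the presentation.

```python
def light_field_bytes(views_u, views_v, width, height, bytes_per_pixel=3):
    """Uncompressed size of one light field frame sampled on a 4D grid."""
    # Two dimensions index the viewpoint (views_u, views_v);
    # the other two index the pixel inside each view (width, height).
    return views_u * views_v * width * height * bytes_per_pixel


single_view = light_field_bytes(1, 1, 1920, 1080)
grid_16x16 = light_field_bytes(16, 16, 1920, 1080)
print(single_view)  # 6220800 bytes, about 6 MB
print(grid_16x16)   # 1592524800 bytes, about 1.6 GB for a single frame
```

Even this modest hypothetical grid multiplies the size of an ordinary full HD frame by 256, before any compression is applied.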

Consequently, there is a more practical way to apply light field technology to VR content: you can combine light field data with polygon data. With this approach, you use light field data only to overlay textures on polygon models and make them more realistic, so you do not need every light ray in a person’s view. Light field technology will play a great role as an option to make VR content more real.

TiledVR

As I mentioned previously, VR with light field technology could cost a lot; however, even without light field data, 360 video itself is much heavier than normal video. Next, I will introduce TiledVR, a technology to reduce the payload size of live 360 video content.

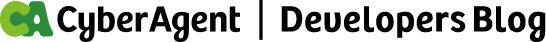

At the 2017 NAB Show, Rob Koenen, a founder of Tiledmedia, introduced TiledVR. TiledVR makes it possible to stream very high quality 360 VR, such as UHD, at realistic bitrates. Streaming 360 video costs much more than normal video because, in theory, we need all the pictures surrounding a user even though the user can only look within his or her viewport at any given moment. To simplify, let’s say the user only sees about 1/8th of the whole 360 panorama picture. If your server sends the user the entire picture at a quality as high as UHD, the data payload becomes far too large. TiledVR comes to the rescue here.

With TiledVR, you divide a 360 panorama picture into tiles, which enables your server to send only the tiles within a user’s viewport at high quality. Additionally, your server has the option of sending the rest of the tiles at lower quality.

Now a viewer can watch a higher-resolution picture efficiently. The VR device holds low-resolution pictures for the area outside the viewport, so when the viewer turns his or her head, there is at least some image to see there. Ideally, the VR device fetches the higher-resolution image by the time the viewer’s focus recovers from the motion of turning the head.
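The tile selection can be illustrated with a minimal Python sketch. This is not Tiledmedia's implementation: it assumes the panorama is cut into equal vertical slices by yaw angle only, and the function name `tile_qualities` and its parameters are hypothetical.

```python
from typing import List


def tile_qualities(yaw_deg: float, fov_deg: float, num_tiles: int) -> List[str]:
    """Return 'high' or 'low' for each equal yaw slice of a 360 panorama."""
    tile_width = 360.0 / num_tiles
    qualities = []
    for i in range(num_tiles):
        center = (i + 0.5) * tile_width
        # Smallest angular distance between the tile center and the viewing
        # direction, so that 350 degrees and 10 degrees count as 20 apart.
        diff = abs((center - yaw_deg + 180.0) % 360.0 - 180.0)
        # A tile overlaps the viewport when its center lies within half the
        # field of view plus half a tile width of the viewing direction.
        overlaps = diff < (fov_deg + tile_width) / 2.0
        qualities.append("high" if overlaps else "low")
    return qualities


# Looking straight ahead (yaw 0) with a 90 degree field of view and 8 tiles:
print(tile_qualities(0.0, 90.0, 8))
# ['high', 'low', 'low', 'low', 'low', 'low', 'low', 'high']
```

In this example only 2 of the 8 tiles need to be fetched at high quality, and the other 6 can be sent at a lower quality as a fallback for when the viewer turns around.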

We will need technologies like TiledVR since people are not satisfied with VR content unless the resolution of every image is 4K or higher. TiledVR is a way to make that possible within realistic bandwidth.

View Prediction

Facebook has enhanced the way it streams the highest number of pixels to a person’s field of view, similar to TiledVR but in a more intelligent way. Facebook took on the challenge of prioritizing which area of the panorama picture a VR player should fetch at a higher bitrate by predicting where a viewer will look next in a 360 video.

Shannon Chen, David Pio, and Evgeny Kuzyakov described how their view prediction works at F8 2017. Facebook developed 3 technologies to predict what a person is most interested in:

- Gravitational predictor: A physics simulator calculates how a person’s focal point moves on the landscape constructed by the heatmap.

- Heatmaps generated by AI: Deep learning helps find the most interesting parts of a 360 video by generating heatmaps even when there is no heatmap yet and interesting parts are outside the viewport.

- Content-dependent streaming: The content is transformed in order to direct the view to an intended focal point calculated from the actual and AI-generated heatmaps, so that the content can be delivered as a single stream. A single stream can be pre-buffered, so it can be played back smoothly even on low bandwidth.
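To give an intuition for the gravitational predictor, here is a toy Python sketch. It is not Facebook's algorithm: it assumes a one-dimensional heatmap over yaw bins and treats each bin's interest value as a mass that pulls the focal point toward it. The function name `predict_focus` and all constants are hypothetical.

```python
def predict_focus(heatmap, start, velocity=0.0, steps=50, dt=0.1, damping=0.9):
    """Simulate where a focal point drifts on a 1D interest heatmap."""
    pos = start
    for _ in range(steps):
        force = 0.0
        for j, heat in enumerate(heatmap):
            offset = j - pos
            # Inverse-square style pull toward each bin, softened by +1.0
            # so the force stays finite when the point sits on a bin.
            force += heat * offset / (abs(offset) ** 3 + 1.0)
        # Damping acts like friction and keeps the point from oscillating forever.
        velocity = (velocity + force * dt) * damping
        # Clamp the position to the valid range of bins.
        pos = min(max(pos + velocity * dt, 0.0), len(heatmap) - 1.0)
    return pos


heatmap = [0.0] * 10
heatmap[8] = 5.0  # one interesting spot at bin 8
predicted = predict_focus(heatmap, start=2.0)
print(2.0 < predicted < 8.0)  # True: the focal point drifts toward the hot spot
```

A player could use such a predicted position to decide which area to fetch at a higher bitrate before the viewer actually looks there.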

These are smart but complex technologies, so check out the post “Enhancing high-resolution 360 streaming with view prediction” for more details.

Content Adaptive Bitrate

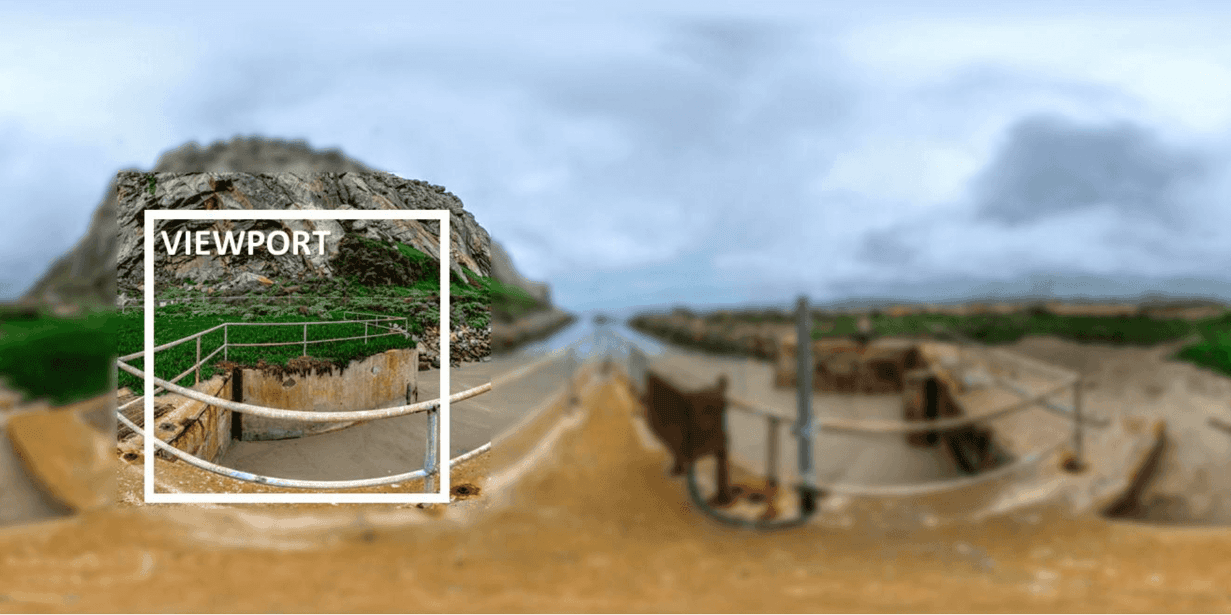

While Facebook has developed a content-dependent approach to dynamic streaming technology, Beamr has developed another content-dependent approach: an encoding technology called content adaptive bitrate.

Content adaptive bitrate is very useful for reducing the size of video media files. Dror Gill, CTO of Beamr, introduced their content adaptive bitrate technology at the 2017 NAB Show. Speaking of adaptive bitrate, we already provide adaptive bitrate streams so that various devices can play back our content with ease. Conventionally, we prepare several streams ranging from lower to higher bitrates and let the player choose which stream to play back according to the available bandwidth. This conventional adaptive bitrate lets smartphones on narrow bandwidths download less data.
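The conventional, player-side selection can be sketched in a few lines of Python. This is a simplified illustration rather than the logic of any particular player; the bitrate ladder, the safety factor, and the name `choose_rendition` are assumptions.

```python
def choose_rendition(bitrates_kbps, bandwidth_kbps, safety=0.8):
    """Pick the highest bitrate that fits within the measured bandwidth."""
    # Leave some headroom so small bandwidth drops do not stall playback.
    budget = bandwidth_kbps * safety
    affordable = [b for b in sorted(bitrates_kbps) if b <= budget]
    # Fall back to the lowest stream when nothing fits the budget.
    return affordable[-1] if affordable else min(bitrates_kbps)


ladder = [400, 1200, 2500, 5000]  # hypothetical streams prepared in advance
print(choose_rendition(ladder, 3500))   # 2500
print(choose_rendition(ladder, 300))    # 400
print(choose_rendition(ladder, 10000))  # 5000
```

Note that every stream in the ladder still has to be encoded and stored in advance; the player only picks among them.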

Content adaptive bitrate, however, is nothing like this. It leads to actual file size efficiency because it is an encoding technology that encodes video at a lower bitrate. The technology lets your encoder adjust the bitrate frame by frame, calculating the right bitrate for a frame based on the previous frame. As a result, your encoded video’s file size becomes much smaller even though its quality stays the same. According to Beamr, it can reduce the number of bits by 50% compared to normal encoders.

Content adaptive bitrate will cut down on the payload size and the storage space needed for your existing content. The encoders are fully compliant with H.264 and H.265.

CMAF

If you want to reduce storage costs for media files, you will wish you could get rid of redundant copies of the same media content in multiple file formats. CMAF, the Common Media Application Format, makes it possible to stream both HLS and MPEG-DASH from a single set of media files. These days many video streaming services are forced to use both HLS and MPEG-DASH to deliver the same content to a variety of devices, because the mainstream platforms support different DRM systems and encryption modes. Among the mainstream Web browsers, namely Google Chrome, Microsoft Internet Explorer and Edge, Mozilla Firefox, and Apple Safari, the supported DRM systems and encryption modes differ:

- Chrome – Widevine/AES-CTR

- Internet Explorer/Edge – PlayReady/AES-CTR

- Firefox – Widevine/AES-CTR

- Safari – FairPlay/AES-CBC

This means that you need to encode the same content multiple times just to put it in different media containers. Therefore, we want CMAF.
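The list above can be written down as a small Python lookup table to see how many differently encrypted copies a service needs today. The table only restates the list; the names `BROWSER_DRM` and `required_encryption_modes` are hypothetical.

```python
from typing import Iterable, List

# Browser -> (DRM system, encryption mode), as listed above.
BROWSER_DRM = {
    "Chrome": ("Widevine", "AES-CTR"),
    "Internet Explorer/Edge": ("PlayReady", "AES-CTR"),
    "Firefox": ("Widevine", "AES-CTR"),
    "Safari": ("FairPlay", "AES-CBC"),
}


def required_encryption_modes(browsers: Iterable[str]) -> List[str]:
    """Return the distinct encryption modes needed to cover the given browsers."""
    return sorted({BROWSER_DRM[name][1] for name in browsers})


print(required_encryption_modes(BROWSER_DRM))            # ['AES-CBC', 'AES-CTR']
print(required_encryption_modes(["Chrome", "Firefox"]))  # ['AES-CTR']
```

Covering all four browsers takes two encryption modes, so the same content has to be stored twice.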

Stefan Pham from Fraunhofer FOKUS described the current status of CMAF. CMAF is ISOBMFF-based, so it can already be used for the media segments of MPEG-DASH streaming. Moreover, CMAF can now be used for those of HLS streaming as well. Apple announced at WWDC 2016 that HLS supports fragmented MP4, the parent of CMAF. The most significant reason why we have supported HLS with MPEG-TS is that we want to make our service available to iOS users (an app on iOS must use HLS to play back video streaming content longer than 10 minutes over a cellular network), but now there is another option: streaming HLS with CMAF.

Also, CMAF does not mandate a particular encryption scheme for Common Encryption, as the Common Encryption specification has been updated to support AES-CBC. As a result, once more devices support CMAF with both AES-CTR and AES-CBC, it will be possible to stream encrypted content as single media files. In fact, Google has already started supporting AES-CBC on Chromecast and Android N devices. The industry might be converging toward AES-CBC.

If we are able to deliver CMAF files with both HLS and MPEG-DASH, we can save plenty of encoding time and storage usage. Nonetheless, its MPEG status is still “Draft International Standard.” We should see how things go.

DASH-IF

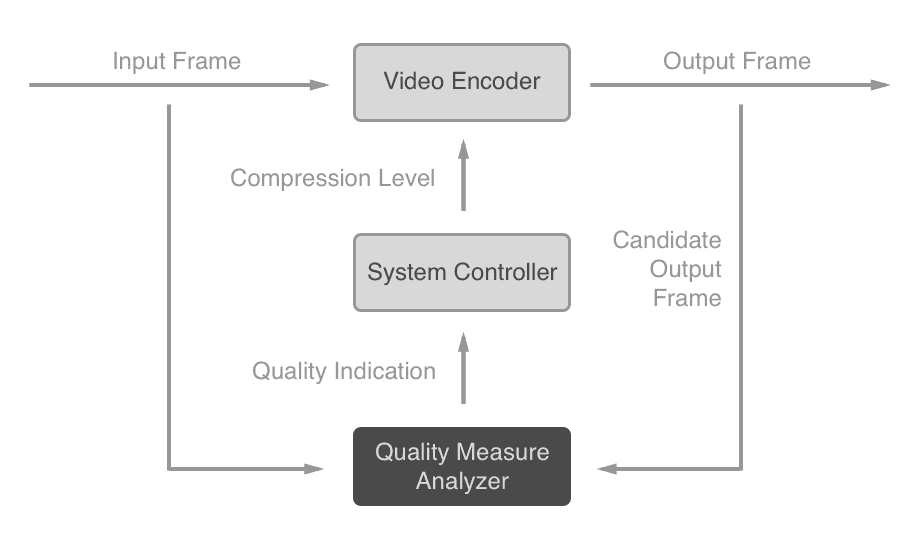

If you are in the video streaming business, I would highly recommend going to a DASH-IF Networking Event, a Meetup held by the DASH Industry Forum, because many engineers developing MPEG-DASH-related technologies gather there. In fact, many great companies attended the DASH-IF Networking Event this April.

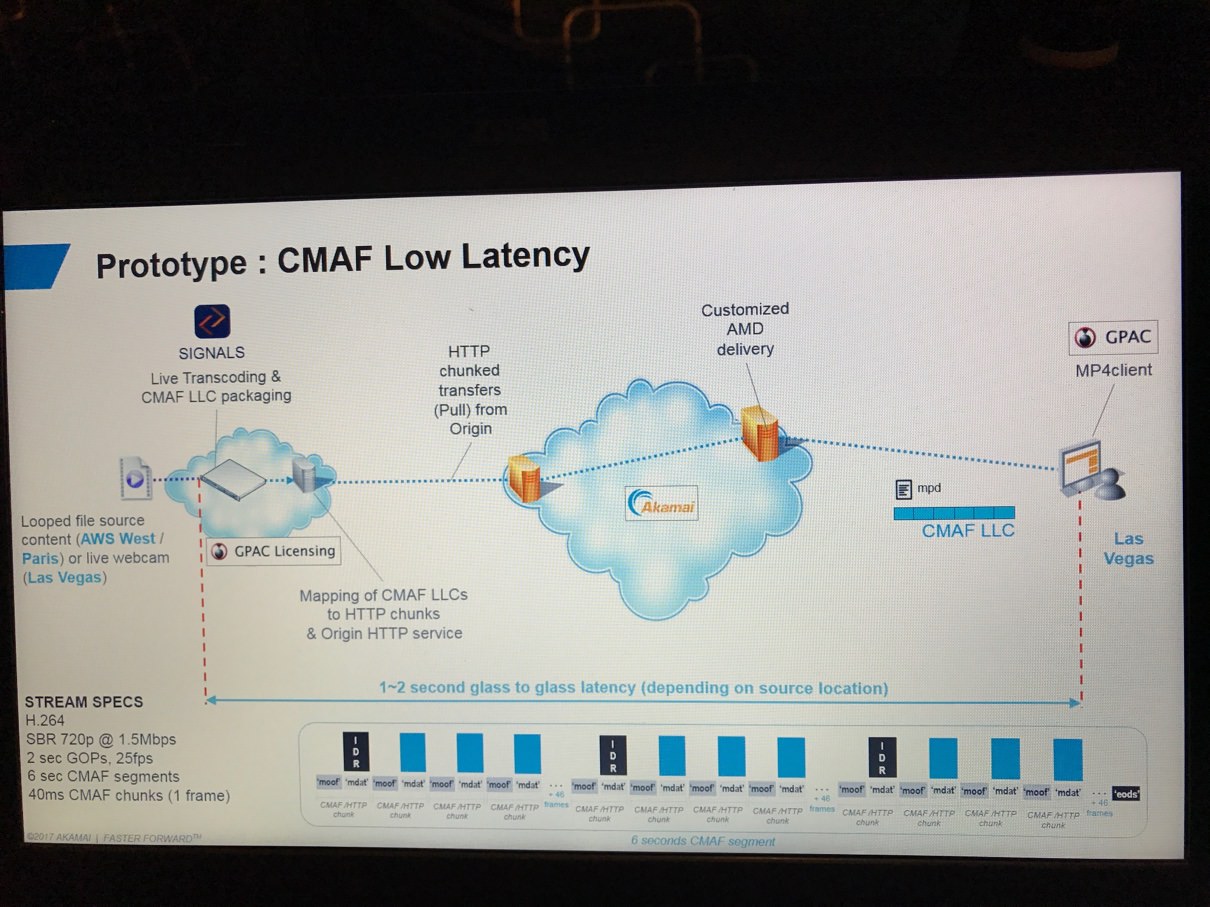

We saw very exciting technology at the Meetup. For instance, Akamai demonstrated a very experimental technology there: a low latency mode offered in CMAF. In CMAF, media samples can be packaged in smaller chunks, so encoded chunks become available at more points in time, which lessens the latency of live streaming. In the demonstration, a source in Paris was live-transcoded and delivered to the venue.
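A simple Python calculation shows why smaller chunks help. It only models the wait before newly captured media can be published, and ignores encoding, network, and player buffering. The durations and the name `min_publish_delay` are hypothetical and were not part of Akamai's demonstration.

```python
def min_publish_delay(segment_seconds, chunks_per_segment=1):
    """Seconds of media that must be encoded before anything can be published."""
    if chunks_per_segment < 1:
        raise ValueError("chunks_per_segment must be at least 1")
    # With whole segments the packager waits for the full segment;
    # with chunks it only waits for one chunk.
    return segment_seconds / chunks_per_segment


print(min_publish_delay(6.0))      # 6.0 seconds with whole 6-second segments
print(min_publish_delay(6.0, 12))  # 0.5 seconds with 12 chunks per segment
```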

You are also encouraged to talk with people there about the problems you currently face. Engineers from THEOplayer and Bitmovin attended the event this year. Both companies develop great HTML5-based players, so it was an excellent opportunity to ask for their advice. I talked with some people about how to protect VR content in Web browsers with the current DRM technologies. AbemaTV protects normal video content using Content Decryption Modules, a.k.a. CDM, and Encrypted Media Extensions, a.k.a. EME, in Web browsers. However, you cannot correctly play back VR content with CDMs such as those of Microsoft PlayReady and Google Widevine, since the pictures need to be bent somehow to create the VR illusion after a CDM decrypts them, and Web browsers do not let you modify the pictures after decryption.

THEOplayer, which develops a VR SDK for their HTML5 video player, advised me that you could implement a custom DRM module with EME and ClearKey to protect your content. Although it does not meet the Hollywood-level standard, it is one way to protect it. A way to meet that standard will surely come in the future, but in the meantime we can discuss what is possible with the current technology with those prominent companies in the MPEG-DASH industry.
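The advice did not come with code, so the following is only a sketch of one piece of such a setup: building a Clear Key license on the server side in Python. The Encrypted Media Extensions specification defines the Clear Key license as a JSON Web Key set with base64url-encoded values without padding; in the browser, that JSON would be passed to `MediaKeySession.update()` for the `org.w3.clearkey` key system. The function names and the sample key values here are hypothetical.

```python
import base64
import json
from typing import Dict


def b64url(data: bytes) -> str:
    """Base64url-encode without padding, as the Clear Key format requires."""
    return base64.urlsafe_b64encode(data).rstrip(b"=").decode("ascii")


def clearkey_license(keys: Dict[bytes, bytes]) -> str:
    """Build a Clear Key license (JWK set) from a key ID -> key mapping."""
    jwk_set = {
        "keys": [
            {"kty": "oct", "kid": b64url(key_id), "k": b64url(key)}
            for key_id, key in keys.items()
        ],
        "type": "temporary",
    }
    return json.dumps(jwk_set)


# Sample 16-byte key ID and key; real values would come from your packager.
print(clearkey_license({bytes(16): b"\xff" * 16}))
```

Because the keys travel in the clear inside this JSON, you would need to protect the license request itself, for example by authenticating the user before returning the license.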

Besides, the DASH-IF Networking Event was a networking event, so we had a pretty good time with some drinks, too!

Conclusion

In conclusion, Meetups and conferences around San Francisco and other places in the United States have so far been excellent opportunities to find technologies that are new to us at AbemaTV, and more new technologies are coming. Of course, adopting a new technology does not always lead to a good result, but it is worth trying when entering a new industry, which for us is the video industry. My days of research are still ongoing, and I will do my absolute best to share more interesting information with you soon. I hope I will see you again.